Generative Artificial Intelligence

Welcome to my page on Artificial Intelligence. This page will cover several different topics related to AI and LLMs.

Have you ever used AI?

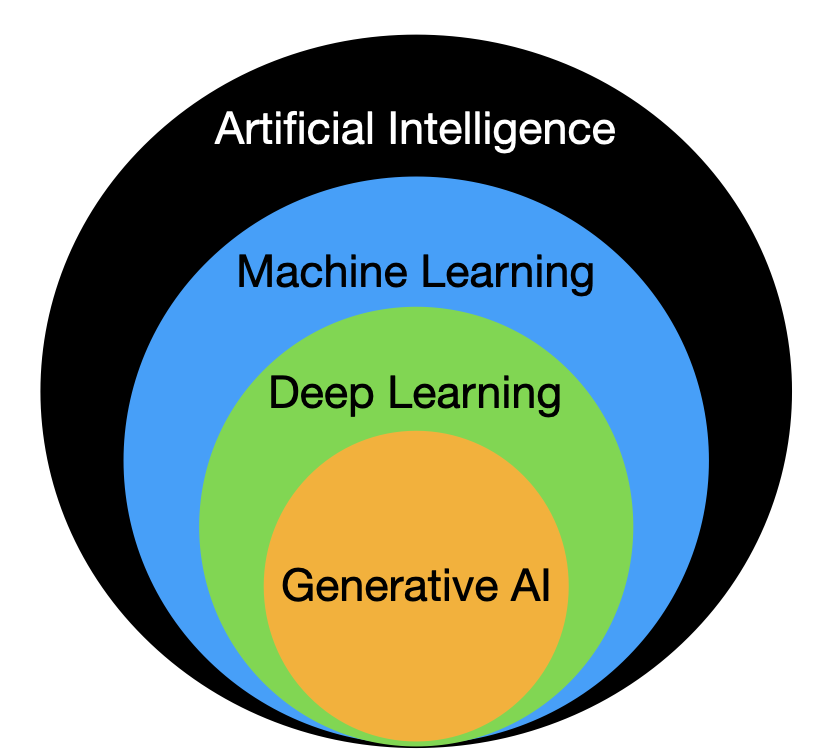

Chances are you have, even if you didn’t know it. Platforms like Netflix, YouTube, Amazon, and Google (among many others) use algorithms from a field known as Machine Learning, which is a subset of artificial intelligence. AI is present in nearly every part of your technological life, and at the pace we are moving, any part it hasn’t reached yet, it soon will.

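Essentially, Machine Learning is when a computer tries to find a relationship between two or more things from example data. It usually requires human intervention of some kind to ensure that the results are accurate. Deep Learning, on the other hand, is a subset of Machine Learning that uses many-layered neural networks. These networks can learn patterns directly from raw data such as text or images with much less human intervention, although people are still needed to prepare the data and check the results.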

With the rise of Deep Learning and a neural network architecture called the Transformer, we began to see the kind of AI that the term is now largely associated with: Generative AI, particularly Large Language Models (LLMs). Generative AI takes input such as text or images and produces output such as text, images, video, or music. Anything a computer generates in response to a user’s input, such as a request to create something, is called Generative AI.

What is Generative AI?

In essence, Generative AI uses a set of training data that “trains” the model to produce a desired response for the user. LLMs, for example, are usually trained on a vast amount of general information, although a model can also be highly specialized in a particular type of data. If you are familiar with the concept of corpora, you might think of an LLM’s training data as a kind of corpus. However, the data isn’t always standardized; that is, the source may not be an actual corpus. It could very well be news articles, blog posts, forum posts, and so on.

How does Generative AI work?

As mentioned earlier, a Generative AI LLM is based on a set of training data, which it then uses to output text in response to the user’s input. It may look as though the computer understands your language, but what it actually does during training is encode all of the training data as numbers. Fundamentally, computers are just a series of switches, each either on (1) or off (0). If you have heard the term “binary,” this is what is meant: every number a computer stores is ultimately a long sequence of 1s and 0s.

Once the text has been encoded, the model learns to predict the probability of the next part of the sequence, typically the next word or word fragment (called a token). The predicted sequence is then decoded back into “strings,” the letters and words that (hopefully) we can understand and make use of. The larger the training set, the more possibilities the model has to draw on. In the image below, each possible next word is shown with its predicted probability.
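The encode, predict, and decode steps described above can be illustrated with a deliberately tiny Python sketch. This is a simplification under stated assumptions, not how a real LLM is built: real models split text into subword tokens and use a Transformer neural network with billions of parameters, whereas this toy simply counts which word follows which (a “bigram” model) in one made-up training sentence. The training text and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy training text; real LLMs train on billions of tokens.
TRAINING_TEXT = "the cat sat on the mat the cat ate the fish"

def build_vocab(words):
    """Encode: give every distinct word an integer ID (a stand-in for tokenization)."""
    return {w: i for i, w in enumerate(dict.fromkeys(words))}

def train(token_ids):
    """Count how often each token ID is followed by each other token ID."""
    follows = defaultdict(Counter)
    for current, nxt in zip(token_ids, token_ids[1:]):
        follows[current][nxt] += 1
    return follows

def next_token_probabilities(follows, token_id):
    """Turn the counts for one token into probabilities for the next token."""
    counts = follows[token_id]
    total = sum(counts.values())
    return {nxt: n / total for nxt, n in counts.items()}

words = TRAINING_TEXT.split()
vocab = build_vocab(words)
id_to_word = {i: w for w, i in vocab.items()}  # Decode: ID -> word
ids = [vocab[w] for w in words]
model = train(ids)

# Which word is most likely to come after "the"?
probs = next_token_probabilities(model, vocab["the"])
for token_id, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"the -> {id_to_word[token_id]}: {p:.0%}")
```

Running it prints “cat” at 50% and “mat” and “fish” at 25% each, because “the” is followed by “cat” twice and by each of the others once in the training sentence. A real LLM does the same kind of next-token prediction, but with learned patterns rather than raw counts.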

Far-Reaching Implications of AI

So, you might be asking yourself, why is it important to know this?

This is significant because it demonstrates how language can be artificially taught to a computer. In the future, we might find less of a need to learn other languages. In fact, the design of Generative AI is loosely inspired by the structure of the human brain. Wouldn’t it be amazing if, one day, humans could download information into their brains, as in The Matrix? Of course, learning a language involves much more than information, such as muscle memory and the use of our critical thinking skills. While language teachers still have a job for now, understanding how to navigate the constantly changing landscape of technology is valuable.

It’s also important to understand AI if you plan to use it yourself. If you teach at a school or university, your students are very likely already using it in some way. And if you don’t jump on the bandwagon and start showing them how to use it ethically, we could see the first generation of individuals who begin to destroy the moral compass of our society.

This is another reason why it’s important to be aware of and understand AI: it is going to fundamentally change the educational landscape, for both good and bad.

Who makes LLMs?

Now that you are starting your journey with Generative AI, a good first question is where to begin. There is no right or wrong answer, but here is a chart showing the Large Language Models in popular use and the companies that created them.

| Model | Vendor | Estimated # of Parameters |
|---|---|---|
| ChatGPT 4 | OpenAI | 1.5 trillion |
| Google Bard | Google DeepMind | 540 billion |
| ChatGPT 3.5 | OpenAI | 175 billion |
| Claude 2 | Anthropic | 130+ billion |
| LLaMa 2 | Meta | 70 billion |

While many more models exist, these are the best known in terms of usage and notoriety. As the open-source community continues to develop Large Language Models, open models could soon surpass very large models like ChatGPT 4. In fact, at the time of writing, some models already claim to rival GPT-4.

Implications of Student Use of AI in Assessment

There is already a lot of data on how grades are normally distributed. In general, the distribution should resemble the image below.

[Image: typical grade distribution]

However, as Generative AI becomes more widely used, it will inevitably require a complete overhaul of the language testing systems in our schools and universities, because the average grade of students who use AI will likely shift upward by around 2 standard deviations from the original mean. In other words, an average grade of 75% would move up by two standard deviations. Depending on how widely grades are spread and on the types of assessment used in the curriculum, the new average could fall anywhere from 80% to 95%.
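The size of this shift depends on how spread out the original grades are. Writing $\mu$ for the original mean grade and $\sigma$ for its standard deviation (symbols introduced here for illustration), a two-standard-deviation shift from a 75% average lands in the 80% to 95% range whenever $\sigma$ is between 2.5 and 10 percentage points:

```latex
\mu_{\text{AI}} = \mu + 2\sigma = 75 + 2\sigma
\quad\Longrightarrow\quad
\begin{cases}
\sigma = 2.5 \text{ points}: & \mu_{\text{AI}} = 75 + 2(2.5) = 80\\
\sigma = 10 \text{ points}: & \mu_{\text{AI}} = 75 + 2(10) = 95
\end{cases}
```

These $\sigma$ values are back-calculated from the range given above, not measured from real grade data.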

[Image: grade distribution shifted upward when students use AI]

One way to counter this shift in the grade distribution while still allowing students to use AI is to design assessments in which students use it ethically, with little chance of cheating. Here are a few possibilities:

- In-Person Writing (rather than for homework)

- Interactive Whiteboarding

- Oral Examinations (These are used quite frequently in higher education, so it seems strange not to use them at lower levels of education as well.)

- Performances

- Socratic Seminars

- Debate or Speech

- Classroom Discussions

- Problem-Based Learning

- Practical Experiments

- Group Projects (The idea here is that group members will hold each other accountable for their use of AI.)

- Peer Teaching

- Internships or Field Work

- Community-Based Projects

- Game-Based Learning (especially with time limits)

- Project-Based Learning

One might see from this list that the emphasis tends to be on the productive skills of speaking and writing, particularly in class. Tasks that focus on these productive skills require students to have a reasonable amount of knowledge. In this way, we can be more confident that a student who uses AI is doing so ethically, since they understand the subject matter and can engage with it at a reasonable level. By contrast, a student who gives a memorized speech based on the AI’s response, without a clear understanding of it, is more likely to be found out through a few simple follow-up questions.

In addition, carrying these tasks out in class lets each presenter (in the case of speaking) serve as a model for their peers, who will see the presentation as a positive or negative example depending on how well the presenter answers the questions asked.

A Potential Warning

Although the evidence is not conclusive, people tend to trust information that comes from a computer more than information from another person. If users are not aware of this tendency and do not take a level-headed approach to AI, it could lead to some fairly disastrous results in the future.

One such disaster can occur when LLMs hallucinate, that is, produce confident-sounding information that is false or made up. Among other causes, hallucination can stem from a lack of relevant training data or from unclear user input. The danger is that an AI may hallucinate something significantly incorrect and the user then takes it as 100% true. While AI can be a great tool for learning, it should NEVER be treated as an original source.

For a fuller account of the kinds of mistakes an AI may make, please see the accompanying video presentation.

With these warnings in mind, here are a few possible use cases I can see for teachers at the time of writing:

- Ideation (or Brainstorming)

- Assistance with lesson planning

- Creating texts for reading and listening

- Determine the reading level of a text

- Rewrite a text at a higher or lower level (great for differentiated learning)

- Create comprehension questions

- Create extension activities

- Rewrite a text in a different style (can be useful for analyzing classic literature from a different perspective or starting off a story for students to continue working with)

- Create a story with specifics

- Evaluate responses from students

Students can benefit from many of the same uses. If they are familiar with how to do the following, they will have the tools they need to become lifelong learners:

- Ideation (or Brainstorming)

- Determine the reading level of a text (this is particularly useful for extensive reading taking place outside the classroom)

- Summarize or make a list of key points of a text (particularly research papers or textbooks)

- Receive feedback (after explaining something in their own words, they can ask, “Do I understand this concept correctly?”)

- Create quizzes to self-test (another indicator for measuring their understanding of a given material)

- ELI5 (“Explain Like I’m 5”)

In particular, AI can have far-reaching implications for areas like Problem Solving and Curriculum Design. While it is not always perfect, it can walk you through ideas by asking questions that start the problem-solving process, followed by further questions based on your answers.

Curriculum Design

- Determine the reading level of a text (great for choosing a standard text to use with students)

Problem Solving

- 5 Whys

- Six Thinking Hats Method

- Mind mapping

- Decision analysis

- PESTLE analysis

Prompt Engineering

The aim of this section is to provide a very basic overview of how to create effective prompts so that you get what you need from the AI. For a more in-depth explanation, please visit the links under Additional Resources.

At the very basis of prompt engineering is a concept known as “G-I-G-O,” or “Garbage In, Garbage Out.” This means that the quality of what the LLM produces depends on the quality of what you put into your prompt. So if you take the time to think about what you want the AI to do and give it some examples and plenty of background information, the LLM will ideally return the output you are looking for. However, if you don’t consider your input carefully or your instructions aren’t clear enough, the AI may ask you to clarify (“I’m not sure what you are asking. Could you clarify what you meant by ‘x’, ‘y’, or ‘z’?”), or it may simply guess what you meant and act on that guess, whether or not it matches what you wanted. This is why companies using AI are employing prompt engineers. The goal is to find the right amount of instruction to get the best output: not too detailed, but not too general either.

Now let’s examine some techniques we can use in order to get the best possible outputs from a Large Language Model.

1. Clear instructions

Stating your instructions as simply and succinctly as possible gives you a better chance of getting the output you want from the LLM. For example, ask yourself: if you were doing the task yourself, would it take several steps? If so, it may be a good idea to include those steps in your prompt.

2. Adopt a persona

If you tell the LLM that it is an expert in _____, it will attempt to behave and respond as an expert in that field would.

3. Specify the format

If you need the answer in a specific format (e.g., bullet points, a numbered list, a downloadable file, a chart), say so explicitly. If you leave it out, the AI will choose the format it thinks is best, which may not be the one you want.

4. Avoid leading the answer

This is particularly important for problem solving or ideation. If you try to lead the AI to a particular answer or response, you essentially undermine its usefulness as a tool that can think outside the box and share fresh ideas. On the other hand, some of its responses may be so far-fetched that they are next to impossible to implement, no matter how creative they are. I’m reminded of a time I asked it how to make my classroom more interactive, and it suggested equipping all my students with VR glasses. This was entirely unreasonable for my teaching context: I had close to 50 students, no budget for this idea, and no space in which to do it. Nonetheless, asking it for 5 or 10 ideas can certainly prove helpful, since there are usually some good ideas among them.

5. Limit the scope

Large Language Models have a strong tendency to give long, drawn-out responses. To avoid this, make sure to specify how much information you need, particularly if you are working with an API. This can be as simple as saying something like, “Don’t go into lots of detail. Just give me the basics in a couple of sentences.” While LLMs cannot yet reliably count words or sentences, the model will try to keep its answer significantly shorter than it would have if you hadn’t included this.
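To show how the five techniques above can fit together, here is a minimal Python sketch that assembles a prompt from separate pieces. The function `build_prompt` and its parameters are hypothetical, invented for this illustration; they are not part of any LLM product’s API. The example request reuses the classroom scenario from technique 4, stating real constraints (class size and budget) without hinting at a preferred answer.

```python
def build_prompt(task, persona=None, steps=None, output_format=None, length_limit=None):
    """Combine the prompting techniques above into a single prompt string.

    All parameter names are illustrative, not part of any LLM product's API.
    """
    parts = []
    if persona:                                   # 2. Adopt a persona
        parts.append(f"You are an expert in {persona}.")
    parts.append(task)                            # 1. Clear instructions
    if steps:                                     # 1. ...including any steps you would follow
        parts.append("Follow these steps:")
        parts.extend(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    if output_format:                             # 3. Specify the format
        parts.append(f"Format your answer as {output_format}.")
    if length_limit:                              # 5. Limit the scope
        parts.append(length_limit)
    return "\n".join(parts)


# 4. Avoid leading the answer: ask an open question, but give the real constraints.
prompt = build_prompt(
    task=("Suggest 5 ways to make my classroom more interactive. "
          "I teach close to 50 students and have no budget for new equipment."),
    persona="language teaching",
    output_format="a numbered list",
    length_limit="Keep each idea to one sentence.",
)
print(prompt)
```

The printed prompt can be pasted into a chat interface as-is. If you are calling an LLM through an API, many providers also offer a setting that caps the length of the response in tokens (the exact parameter name varies by provider), which enforces the scope limit more strictly than wording alone.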

Additional Resources

Conclusion

While AI may present many hurdles for education in the future, when properly used at all levels of schools and universities, it can prove to be an invaluable tool. Conversely, when misused, it may spell the death of an ethical and moral society. The main hurdles are knowing what to ask, how to ask it, and whether it is even possible. AI still has significant limitations, but they will shrink over time. By preparing ourselves and our students for the inevitable, we can hope to equip them with the tools to advance their futures as autonomous learners rather than thought slaves to robots.

Written by S. Hatting (Originally published on 13 Jan. 2024)